Utilitarian concerns increasingly drive machine ethics, particularly the few ethical discussions in learning analytics. Determining the most good for the most people is a responsible way forward, but perhaps not the most comprehensive, especially when assessing potential outcomes. Innovations in learning analytics may occur more rapidly and with better outcomes if informed ethical discussions occur at every step of development.

James E. Willis, III is an educational assessment specialist at Purdue University.

The Failure of Ethical Discussions in Learning Analytics

Potential ethical reverberations are finding an audience in the world of learning analytics and educational data mining. Current discussions of learning analytics and ethics are problematic, however, because of their binary approach: actions are deemed either "right" or "wrong," most often in legalistic terms. As innovation continues to leap ahead, the question "what does this mean for humanity?" becomes more acute. This is especially true in learning analytics because the impact goes beyond students, professors, and administrators. Predicting student outcomes, and in a way creating an environment for potential success where previously there would most likely have been failure, not only alters a deterministic future but also creates a generation of graduates who have benefitted from statistical regression applied to their data. Sharon Slade and Paul Prinsloo identify possible institutional purposes: "to maximize the number of students reaching graduation, to improve the completion rates of students who may be regarded as disadvantaged in some way, or perhaps to simply maximize profits."1 This is no mere abstraction: student-level data is fed into predictive models to change the future, whether the intent is to produce successful graduates, to generate profits, or both, and that alone raises ethical questions.

Abelardo Pardo and George Siemens recently argued, "In the digital context, we define ethics as the systematization of correct and incorrect behavior in virtual spaces according to all stakeholders."2 They go on to apply a legal framework to determine if ethical constructs fit "transparency, student control over the data, security, and accountability and assessment," as defined within legal understandings of privacy.3 Slade and Prinsloo likewise devised categories to ethically analyze problems:

- The location and interpretation of data

- Informed consent, privacy, and the deidentification of data

- The management, classification, and storage of data4

Like Pardo and Siemens, Slade and Prinsloo situate ethical discussion within, and prior to, legal considerations: "Our approach holds that an institution's use of learning analytics is going to be based on its understanding of the scope, role, and boundaries of learning analytics and a set of moral beliefs founded on the respective regulatory and legal, cultural, geopolitical, and socioeconomic contexts."5 Similarly, the Asilomar Convention for Learning Research in Higher Education recently affirmed the following principles: "Respect for the rights and dignities of learners, beneficence, justice, openness, the humanity of learning, continuous consideration."6 These principles were based on the 1973 Code of Fair Information Practices and the 1979 Belmont Report.

The current state of ethics in learning analytics, and perhaps in technology writ large, rests on utilitarianism, which holds that we ought to do what causes the most good for the most people. Learning analytics draws on the lessons and advantages of business analytics to help achieve better outcomes for students, so it makes sense to apply utilitarianism as the standard way of assessing the ethics of steps taken to help the most students. Perhaps this is why discussions often pivot to the law: utilitarianism and the law are inescapably connected, since both attempt to take individual information and use it to serve the greater good. The implicit connection goes deeper. An individual's educational data, combined with many other individuals' data, predicts outcomes for others; likewise, precedent established in the law guides future decisions. Like paternalism in learning analytics (stakeholders believing they know what students need) and the rule of law, utilitarianism has pragmatic problems, especially where implementation creates conflicts between individual users and larger groups. Contextually, where ethics meets on-ground development, we cannot undo what has been done. A technology deployed for wider usage remains a permanent possibility, even if only in altered states (for example, Napster in all of its innumerable and enhanced clones). Sometimes it is impossible to determine the greater good, especially when faced with unintended outcomes, so learning analytics ought not to rely solely on utilitarianism. A more robust approach is needed, one that can keep pace with the speed of development.

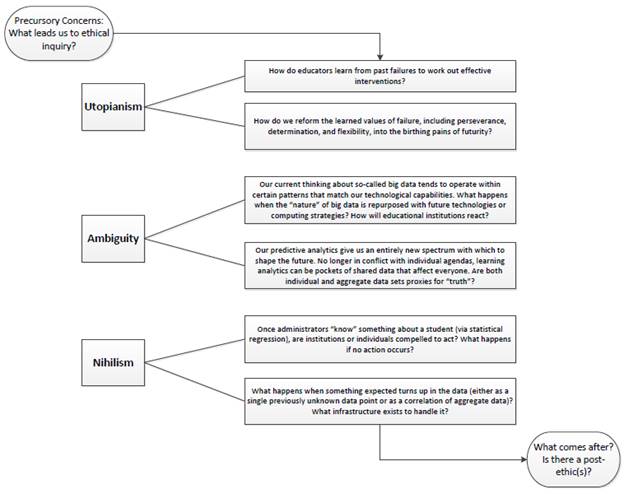

Moral Tensions and the Implementation of Learning Analytics

Modeling ethics reveals the first problem: can we establish a working model to appraise, govern, and enhance our technologies? Rather than thinking in terms of modeling, which requires meeting certain preconditions to furnish tenable outcomes, it may be more fruitful to think in terms of the tensions present in our technologies. These tensions compose a fluid, unfixed set of concepts that drive questions and outcomes. Rather than a prescriptive or equation-based model, this set of tensions operates within two outer parameters: precursor concerns (What leads us to ethical inquiry? What requisite problems must be considered, and why?) and what comes after ethical inquiry (Is there a post-ethic/s?). What comes after ethics is the potentially explosive idea of how our questions, our deeper search for connections, drive our technologies forward. In a sense, how do we create the future by establishing how we envisage it? Framing the tensions of moral utopianism, ambiguity, and nihilism will provide a glimpse into the future of technology and, more specifically, into the future of learning analytics.

Moral Utopianism

Moral utopianism evokes how the world ought to be, given perfect circumstances. It presupposes that people act in accordance with their consciences in a way that betters others. Applied to technology, moral utopianism would mean that our computerized machines at worst do no harm and at best provide better environments: human, natural, and mechanized. Applied to learning analytics, moral utopianism would mean that our technologies understand what students need to learn and to thrive, and provide calculated predictions to intervene meaningfully. It would also mean keeping information secure, providing accurate predictions, and assessing changes in variables rapidly. Because moral utopianism aims at virtuous outcomes, two major questions might guide learning analytics:

- How do educators learn from past failures to work out effective interventions?

- How do we reform the learned values of failure, including perseverance, determination, and flexibility, into the birthing pains of futurity?

These questions draw on past student outcomes, reshaping the perception of failure into modified methods of teaching based on both individual and aggregated student data. Operatively, the question pivots on how to provide students with customized education in a one-size-fits-all reality; there must be room for failure to act as a method of creating success. By learning how to cope with academic difficulty, students learn "grit," or the determination to succeed despite prior problems; from failure can stem a new direction and a different future. Learning analytics must respond to this capacity to rewrite a non-deterministic future. The question then becomes how to steer students productively into areas where they can flourish academically.

Moral Ambiguity

Moral ambiguity suggests that the value of an outcome cannot always be determined and thus remains suspended indefinitely, perhaps as a result of conflicting data and undetermined directions. Applied to technology, moral ambiguity means the possibility of taking actions until legal precedent or public outcry limits them. For a recent example of moral ambiguity, consider the development of behavior-altering tracking technologies for insurance companies.7 These technologies are currently legal, but their outcomes remain unknown because they could be positive (fewer traffic fatalities or better eating habits) or negative (inflated insurance premiums or unforeseen tracking capability).

Applied to learning analytics, moral ambiguity means current initiatives in student tracking may have unintended consequences. If an institution correlates student identification card swipes with Wi-Fi pings and this yields surprising educational results, as shown in final grades, can we ascertain what, exactly, might result from such research? The neutrality of moral ambiguity lies not in value but in not knowing. Two questions emerge from moral ambiguity:

- Our current thinking about so-called big data tends to operate within certain patterns that match our technological capabilities. What happens when the "nature" of big data is repurposed with future technologies or computing strategies?

- Our predictive analytics give us an entirely new spectrum with which to shape the future. No longer in conflict with individual agendas, learning analytics can be pockets of shared data that affect everyone. Are both individual and aggregate data sets proxies for "truth"?

The potent implication of moral ambiguity for learning analytics is the reshaping of values without thoughtful reflection on what is gained and what is lost. The values are unknown, and therefore the outcomes are also unknown.

Moral Nihilism

Moral nihilism evinces utter meaninglessness and lack of value pertaining to ethics. On this view, nothing is intrinsically right or wrong, and no behavior can be judged morally. Applied to technology, moral nihilism suggests that innovation may proceed without guidance or reflection because its ramifications or outcomes contain no value. Machinery devoid of value means that whatever outcomes come to pass are without merit. Applied to learning analytics, moral nihilism renders obsolete paternalism (administrators "knowing" what is best for students), utilitarianism (determining what will benefit the greatest number of students), and care ethics (establishing how to address and redress student concerns holistically and individually). Furthermore, while learning analytics under moral nihilism might logically stress retention for the sake of tuition revenue, even this goal is rendered obsolete. The question of moral culpability emerges because, without value, established norms lack meaning. Moral culpability then reduces to establishing responsibility once predictions are known:

- Once administrators "know" something about a student (via statistical regression), are institutions or individuals compelled to act? What happens if no action occurs?

- What happens when something unexpected turns up in the data (either as a single previously unknown data point or as a correlation of aggregate data)? What infrastructure exists to handle it?

Arguably, any rational or reasonable person would view moral nihilism with deep skepticism. Yet mobile tracking capabilities, powerful regression models, and intervention at any cost speak directly to the perils of moral nihilism, while existing ethical models speak to utilitarianism. Because the two concepts are not in conversation with each other, they lead innovation and our increasingly important discussion astray.

Building Ethics into Every Step

Machine ethics, including learning analytics, stands on the cusp of moral nihilism, and yet human interaction with machines will only continue to accelerate. Now is the time to act within frameworks of human autonomy and agency. The law will not move as fast as innovation, and rightly so, since that restraint prevents unnecessary and even stifling control. Moral frameworks have worked with varying levels of success in the past, but will they continue to do so? The proposed tension model of moral utopianism, ambiguity, and nihilism helps recast innovation from its current implicit utilitarianism into a new scaffolding of possibility (figure 1). Holding utopianism, ambiguity, and nihilism together helps redefine what is learned from academic failure, encourages responsible innovation in the knowledge that competing values often pervade technological innovation, and pushes learning analytics' interventions with students away from a potentially deterministic future. While the theories of utopianism, ambiguity, and nihilism are not new, applying them to innovation in learning analytics is.

Figure 1. Proposed tension model

Learning analytics stands poised to benefit students in previously impossible ways. Alongside innovation, however, ethical discussions need probing questions, assessments of possible outcomes, and active disagreement about future developments. Ethical modeling will not achieve these, at least not in a substantive way; principled reflection needs to keep up with the speed of innovation as closely as possible. An inner matrix of tensions will align ethical reflection with innovation, or at least bring us closer to that goal. When schools or companies build new learning analytics systems, or when schools are deciding between competing products, ethical discussions ought to be at the forefront of outcomes-based commitments. The proposed tensions of utopianism (what is the very best outcome?), ambiguity (are the outcomes knowable?), and nihilism (how are unexpected outcomes handled?) can help institutions and companies fulfill the goal of assisting student success.

1. Sharon Slade and Paul Prinsloo, "Learning Analytics: Ethical Issues and Dilemmas," American Behavioral Scientist, Vol. 57, 2013, p. 1514. doi: 10.1177/0002764213479366

2. Abelardo Pardo and George Siemens, "Ethical and Privacy Principles for Learning Analytics," British Journal of Educational Technology, 2014, p. 2. doi: 10.1111/bjet.12152. Italics original.

3. Ibid., p. 11.

4. Slade and Prinsloo, "Learning Analytics," p. 1515.

5. Ibid., p. 1518. Italics original.

6. Mitchell L. Stevens and Susan S. Silbey, "The Asilomar Convention for Learning Research in Higher Education," June 1–4, 2014.

7. Jathan Sadowski, "Insurance Vultures and the Internet of Things," The Baffler, June 11, 2014.

© 2014 James E. Willis, III. The text of this EDUCAUSE Review online article is licensed under the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 license.